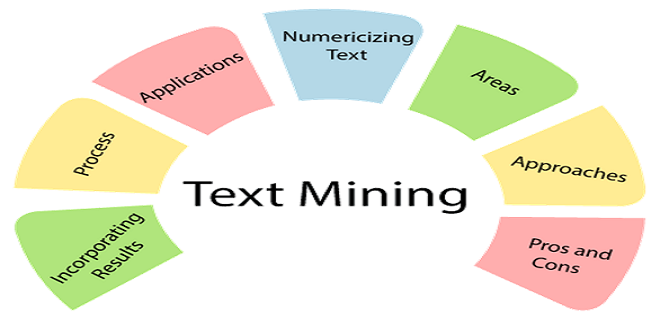

Mining Text Files: Computing Document Similarity, Extracting Collocations, and More

Text Files

Although audio and video content is now pervasive, text continues to be the dominant form of communication throughout the digital world, and that is unlikely to change anytime soon. Developing even a minimal set of skills for compiling and extracting meaningful statistics from the human language in text data gives you significant leverage on a variety of problems that you’ll face on the social web and elsewhere in professional life.

A Whiz-Bang Introduction to TF-IDF

Although rigorous approaches to natural language processing (NLP) that include such things as sentence segmentation, tokenization, word chunking, and entity detection are necessary in order to achieve the deepest possible understanding of textual data, it’s helpful to first introduce some fundamentals from information retrieval theory. The remainder of this chapter introduces some of its more foundational aspects, including TF-IDF, the cosine similarity metric, and some of the theory behind collocation detection. Chapter 6 continues from here with a deeper discussion of NLP.

Inverse Document Frequency

Toolkits such as NLTK provide lists of stopwords that can be used to filter out terms such as and, a, and the, but keep in mind that some terms evade even the best stopword lists and yet are still quite common in specialized domains. Although you can certainly customize a list of stopwords with domain knowledge, inverse document frequency provides a generic normalization metric for a corpus. In the general case, it accounts for terms that are common across a set of documents by considering the total number of documents in which a query term appears.

The intuition behind this metric is that it produces a higher value if a term is somewhat uncommon across the corpus than if it is common, which helps to account for the problem with stopwords we just investigated. For example, a query for “green” in the corpus of sample documents should return a lower inverse document frequency score than a query for “candlestick,” because “green” appears in every document while “candlestick” appears in only one. Mathematically, the only nuance of interest for the inverse document frequency calculation is that a logarithm is used to compress the result into a smaller range, since the score is usually multiplied against term frequency as a scaling factor.
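To make the “green” versus “candlestick” comparison concrete, here is a minimal sketch of the calculation in plain Python. The three-document `corpus`, the naive `tokenize` helper, and the `idf` function are all illustrative assumptions rather than the chapter's actual sample data or code. The sketch uses the plain form log(N / n), where N is the number of documents and n is the number containing the term; some formulations add a constant so that a term appearing in every document does not score exactly zero.

```python
import math

# Hypothetical stand-in corpus; not the chapter's actual sample data
corpus = {
    "a": "Mr. Green killed Colonel Mustard in the study with the candlestick.",
    "b": "Professor Plum has a green plant in his study.",
    "c": "Miss Scarlett watered Professor Plum's green plant while he was away.",
}


def tokenize(text):
    # Naive tokenization (an assumption): lowercase, split on whitespace,
    # and strip punctuation from the ends of each token
    return [w.strip(".,!?;:\"'") for w in text.lower().split()]


def idf(term, corpus):
    # Count the documents in which the term appears at least once
    term = term.lower()
    docs_with_term = sum(1 for text in corpus.values() if term in tokenize(text))
    if docs_with_term == 0:
        return 0.0  # assumption: a term that never appears scores zero
    # The logarithm compresses the ratio into a smaller range
    return math.log(len(corpus) / docs_with_term)


for term in ("green", "candlestick"):
    print(term, idf(term, corpus))
```

In this toy corpus, “green” appears in all three documents and “candlestick” in only one, so “candlestick” receives the higher score.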

Querying Human Language Data with TF-IDF

Let’s take the theory that you just learned about in the previous section and put it to work. In this section you’ll get officially introduced to NLTK, a powerful toolkit for processing natural language, and use it to support the analysis of human language data.

Applying TF-IDF to Human Language

Let’s apply TF-IDF to the sample text data and see how it works out as a tool for querying the data. NLTK provides some abstractions that we can use instead of rolling our own, so there’s actually very little to do now that you understand the underlying theory. The listing in Example 5-5 assumes you are working with the sample data provided with the sample code for this chapter as a JSON file, and it allows you to pass in multiple query terms that are used to score the documents by relevance.
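As a rough illustration of the idea (not the chapter's own listing), the following sketch scores a few documents against multiple query terms using NLTK's `TextCollection` class and its `tf_idf` method. The inline `documents` dictionary stands in for the JSON sample data, and the `tokenize` and `score_documents` helpers are hypothetical names introduced here. Each document's score is simply the sum of the TF-IDF values of the query terms, which is one reasonable way to combine them.

```python
from nltk.text import TextCollection

# Hypothetical stand-in for the chapter's JSON sample data
documents = {
    "a": "Mr. Green killed Colonel Mustard in the study with the candlestick.",
    "b": "Professor Plum has a green plant in his study.",
    "c": "Miss Scarlett watered Professor Plum's green plant while he was away.",
}


def tokenize(text):
    # Naive tokenization (an assumption): lowercase, split on whitespace,
    # and strip punctuation from the ends of each token
    return [w.strip(".,!?;:\"'") for w in text.lower().split()]


tokenized = {doc_id: tokenize(text) for doc_id, text in documents.items()}

# TextCollection takes a list of token lists and computes corpus-wide statistics
collection = TextCollection(list(tokenized.values()))


def score_documents(query_terms):
    # Sum the TF-IDF score of every query term for each document
    scores = {}
    for doc_id, tokens in tokenized.items():
        scores[doc_id] = sum(
            collection.tf_idf(term.lower(), tokens) for term in query_terms
        )
    # Most relevant documents first
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


ranked = score_documents(["candlestick", "green"])
for doc_id, score in ranked:
    print(doc_id, score)
```

Note that NLTK's `idf` is a plain logarithm of the document ratio, so a term such as “green” that appears in every document contributes nothing to the score here; only “candlestick” separates document `a` from the rest.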